What is Threat Intelligence & What are the Indicators of Compromise?

What is a Threat?

By definition, a threat is a potential danger to enterprise assets that could harm those systems. The three terms Threat, Vulnerability, and Risk are often confused: the first, as I explained before, is a potential danger, while a Vulnerability is a known weakness or gap in an asset. A Risk is the result of a threat exploiting a vulnerability. In other words, you can see it as the intersection of the two previous terms. The method used to attack an asset is called a Threat Vector.

There are three main types of threats:

- Natural threats

- Unintentional threats

- Intentional threats
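The relationship between threat, vulnerability, and risk can be sketched in a few lines of Python. This is only an illustration: the threat names, asset names, and weaknesses below are made up, and `find_risks` is a hypothetical helper, not part of any standard tool.

```python
# Each threat is mapped to the weaknesses it is able to exploit (made-up data).
threats = {
    "ransomware campaign": {"unpatched SMB service"},
    "credential phishing": {"no multi-factor authentication"},
    "flood": {"single data center"},
}

# Each asset is mapped to its known vulnerabilities (made-up data).
asset_vulnerabilities = {
    "file server": {"unpatched SMB service"},
    "mail gateway": set(),
}


def find_risks(threats, asset_vulnerabilities):
    """Return (asset, threat, weakness) triples where a threat meets a matching weakness."""
    risks = []
    for asset, weaknesses in asset_vulnerabilities.items():
        for threat, exploited in threats.items():
            # A risk exists only where the two sets intersect.
            for weakness in sorted(weaknesses & exploited):
                risks.append((asset, threat, weakness))
    return risks


risks = find_risks(threats, asset_vulnerabilities)
for asset, threat, weakness in risks:
    print(f"{asset}: {threat} via {weakness}")
```

A threat with no matching vulnerability, or a vulnerability that no threat exploits, produces no entry, which is the "intersection" idea described above.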

What is an Advanced Persistent Threat (APT)?

Wikipedia defines an "Advanced Persistent Threat" as follows:

An advanced persistent threat is a stealthy computer network threat actor, typically a nation-state or state-sponsored group, which gains unauthorized access to a computer network and remains undetected for an extended period.

To explore some APTs, check this great resource by FireEye.

What is Threat Intelligence?

Cyber threat intelligence is information about threats and threat actors that helps mitigate harmful events in cyberspace. Cyber threat intelligence sources include open-source intelligence, social media intelligence, human intelligence, technical intelligence or intelligence from the deep and dark web.

[Source: Wikipedia]

In other words, intelligence differs from raw data and information in that it completes the full picture.

Threat Intelligence goes through the following steps:

- Planning and direction

- Collection

- Processing and exploitation

- Analysis and production

- Dissemination and integration

- Feedback

1. Planning and Direction

The first step to producing actionable threat intelligence is to ask the right question. The questions that best drive the creation of actionable threat intelligence focus on a single fact, event, or activity; broad, open-ended questions should usually be avoided.

Prioritize your intelligence objectives based on factors like how closely they adhere to your organization’s core values, how big of an impact the resulting decision will have, and how time-sensitive the decision is.

One important guiding factor at this stage is understanding who will consume and benefit from the finished product. Will the intelligence go to a team of analysts with technical expertise who need a quick report on a new exploit, or to an executive who is looking for a broad overview of trends to inform security investment decisions for the next quarter?
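One way to make this prioritization concrete is a simple weighted score over the three factors mentioned above. The following is a minimal sketch: the 1 to 5 scales, the weights, and the sample questions are assumptions chosen for illustration, not an established scoring method.

```python
from dataclasses import dataclass


@dataclass
class IntelRequirement:
    question: str
    alignment: int         # fit with organizational values, 1 (low) to 5 (high)
    impact: int            # impact of the resulting decision, 1 to 5
    time_sensitivity: int  # urgency of the decision, 1 to 5


# Hypothetical weights; tune them to your own organization.
WEIGHTS = {"alignment": 0.3, "impact": 0.4, "time_sensitivity": 0.3}


def priority_score(req: IntelRequirement) -> float:
    """Weighted sum of the three prioritization factors."""
    return (
        WEIGHTS["alignment"] * req.alignment
        + WEIGHTS["impact"] * req.impact
        + WEIGHTS["time_sensitivity"] * req.time_sensitivity
    )


def prioritize(reqs: list[IntelRequirement]) -> list[IntelRequirement]:
    """Return requirements ordered from highest to lowest priority."""
    return sorted(reqs, key=priority_score, reverse=True)


requirements = [
    IntelRequirement("Which phishing themes targeted finance staff last quarter?", 3, 3, 2),
    IntelRequirement("Is the new VPN exploit being used against our sector?", 4, 5, 5),
]

for req in prioritize(requirements):
    print(f"{priority_score(req):.1f}  {req.question}")
```

Note that both sample questions focus on a single event or activity, in line with the advice to avoid broad, open-ended questions.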

2. Collection

The next step is to gather raw data that fulfills the requirements set in the first stage. It’s best to collect data from a wide range of sources: internal ones like network event logs and records of past incident responses, and external ones from the open web, the dark web, and technical sources.

Threat data is usually thought of as lists of IoCs, such as malicious IP addresses, domains, and file hashes, but it can also include vulnerability information, leaked data such as the personally identifiable information of customers, raw code from paste sites, and text from news sources or social media.
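As a rough illustration of collecting IoCs from raw text, the sketch below pulls IPv4 addresses, domains, and hashes out of a string with regular expressions. The patterns are simplified assumptions (the domain pattern, for instance, will also match file names such as `report.pdf`), and the sample text uses reserved documentation addresses and the MD5 of an empty input rather than real malicious indicators.

```python
import re

# Simplified patterns for illustration; production extractors need more care.
OCTET = r"(?:25[0-5]|2[0-4]\d|1?\d?\d)"
IPV4_RE = re.compile(rf"\b(?:{OCTET}\.){{3}}{OCTET}\b")
MD5_RE = re.compile(r"\b[a-fA-F0-9]{32}\b")
SHA256_RE = re.compile(r"\b[a-fA-F0-9]{64}\b")
DOMAIN_RE = re.compile(
    r"\b(?:[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z]{2,}\b", re.IGNORECASE
)


def extract_iocs(text: str) -> dict[str, set[str]]:
    """Group the candidate indicators found in free text by type."""
    return {
        "ipv4": set(IPV4_RE.findall(text)),
        "domain": {d.lower() for d in DOMAIN_RE.findall(text)},
        "md5": {h.lower() for h in MD5_RE.findall(text)},
        "sha256": {h.lower() for h in SHA256_RE.findall(text)},
    }


# Made-up report text using documentation-only addresses and a placeholder hash.
sample = (
    "Host beaconed to evil.example.com from 192.0.2.10 and dropped a file "
    "with MD5 d41d8cd98f00b204e9800998ecf8427e, then contacted 198.51.100.7 "
    "over port 443"
)

iocs = extract_iocs(sample)
for kind, values in iocs.items():
    print(kind, sorted(values))
```

Everything extracted this way is only a candidate; filtering and validation belong to the processing stage.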

3. Processing

Once all the raw data has been collected, you need to sort it, organize it with metadata tags, and filter out redundant information as well as false positives and negatives.

Today, even small organizations collect data on the order of millions of log events and hundreds of thousands of indicators every day. That is too much for human analysts to process efficiently, so data collection and processing have to be automated to begin making any sense of it.

Solutions like SIEMs are a good place to start because they make it relatively easy to structure data with correlation rules that can be set up for a few different use cases, but they can only take in a limited number of data types.

If you’re collecting unstructured data from many different internal and external sources, you’ll need a more robust solution. Recorded Future uses machine learning and natural language processing to parse text from millions of unstructured documents across seven different languages and classify them using language-independent ontologies and events, enabling analysts to perform powerful and intuitive searches that go beyond bare keywords and simple correlation rules.
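The sorting, tagging, and filtering described in this stage can be sketched as follows. This is a minimal illustration and not how any particular SIEM or vendor platform works: the `Indicator` class, the `ALLOWLIST`, the `multi-source` tag, and the feed names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Indicator:
    value: str
    kind: str
    sources: set[str] = field(default_factory=set)
    tags: set[str] = field(default_factory=set)


# Hypothetical allowlist of known-benign values (likely false positives).
ALLOWLIST = {"192.0.2.53"}


def process(raw: list[tuple[str, str, str]]) -> dict[str, Indicator]:
    """Deduplicate (value, kind, source) records, drop allowlisted values, add tags."""
    merged: dict[str, Indicator] = {}
    for value, kind, source in raw:
        key = value.strip().lower()  # normalize so duplicates collapse
        if key in ALLOWLIST:
            continue  # filter out a known false positive
        indicator = merged.setdefault(key, Indicator(key, kind))
        indicator.sources.add(source)
    for indicator in merged.values():
        if len(indicator.sources) > 1:
            indicator.tags.add("multi-source")  # metadata tag for later analysis
    return merged


raw_records = [
    ("Evil.Example.com", "domain", "feed-a"),
    ("evil.example.com ", "domain", "feed-b"),
    ("198.51.100.7", "ipv4", "feed-a"),
    ("192.0.2.53", "ipv4", "feed-b"),
]

processed = process(raw_records)
for indicator in processed.values():
    print(indicator.value, sorted(indicator.sources), sorted(indicator.tags))
```

Four raw records collapse into two indicators here, and the one reported by two feeds carries a tag that an analyst could use to rank it higher.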

4. Analysis

The next step is to make sense of the processed data. The goal of the analysis is to search for potential security issues and notify the relevant teams in a format that fulfills the intelligence requirements outlined in the planning and direction stage.

Threat intelligence can take many forms depending on the initial objectives and the intended audience, but the idea is to get the data into a format that the audience will understand. This can range from simple threat lists to peer-reviewed reports.

5. Dissemination

The finished product is then distributed to its intended consumers. For threat intelligence to be actionable, it has to get to the right people at the right time.

It also needs to be tracked so that there is continuity between one intelligence cycle and the next and the learning is not lost. Use ticketing systems that integrate with your other security systems to track each step of the intelligence cycle: each time a new intelligence request comes up, tickets can be submitted, written up, reviewed, and fulfilled by multiple people across different teams, all in one place.

6. Feedback

The final step is when the intelligence cycle comes full circle, making it closely related to the initial planning and direction phase. After receiving the finished intelligence product, whoever made the initial request reviews it and determines whether their questions were answered. This drives the objectives and procedures of the next intelligence cycle, again making documentation and continuity essential.

Where and when does Threat Hunting begin?

Let us try to figure out what triggers hypothesis testing in Threat Hunting. Threat Hunting is based on Cyber Threat Intelligence, so one of the main triggers is information about other attacks or about intruders’ actions and techniques. Here is a good example: many of us increase our information security awareness by reading and studying the latest information about cyber-attacks on specialized security websites and researchers' blogs. Such data can initiate a search for similar threats in the monitored system.

Analysts must draw information from different sources and prioritize among them. It is necessary to test those hypotheses that lie outside the detection zone of security tools and could be used to carry out an attack. At the same time, the experience of threat hunters and their personal rating of the danger of certain threats are of great importance.

Several sources from which Threat Hunting begins:

- Information about a vulnerability that is likely to be present in the monitored network

- Researching key business assets that attackers could target.

- External indicators of compromise - data on attacks on other organizations.

Also, we should not forget about new, previously unknown threats, information about which can also serve as a starting point for a search. It is also important to maintain a system for tracking indicators of compromise.

The most effective way to test Threat Hunting is to simulate an attack. Threat hunting detects less than 1% of incidents. However, 1% should not be taken as a measure of its effectiveness, since the events that information security tools fail to detect can be the most dangerous to your business.

As for the first steps that an organization should take to start Threat Hunting, experts note a basic assessment of risks and assets and the possible impact of potential attacks on them. It is important to understand what the company's critical assets are, where they are located, and what business processes are involved. The next step is collecting the maximum number of logs and metadata from monitored systems. Analysis of this information will provide the first hypotheses.

Threat Hunting perspectives

Attacks will become more and more targeted. It will be more difficult to learn about them using the Internet, so Threat Hunting will evolve. Hunting for threats will become one of the SOC processes; specialized platforms and instruments will appear that will automate the work of analysts and help them process large amounts of data.

In the coming years, Threat Hunting may introduce profiles of organizations that take into account size, industry, and other factors and help build the threat detection process more efficiently.

Containerization and cloud computing will require new methods and approaches to the threat hunting process. Threat Hunting will increasingly go to the cloud as more and more enterprises use cloud technologies.

Some things will not change in the next three to five years, first among them the shortage of personnel. Not all companies that want to hire a Threat Hunting expert will be able to do so. Therefore, outsourcing will proliferate in this segment, and it will become more automated. Even so, Threat Hunting is impossible without a human.

What are the Indicators of compromise (IOCs)?

Indicators of compromise are pieces of information about a threat that can be used to detect intrusions, such as MD5 hashes, URLs, IP addresses, and so on. These pieces can be shared across different organizations thanks to bodies and platforms like:

- Information Sharing and Analysis Centers (ISACs)

- Computer emergency response teams (CERTs)

- Malware Information Sharing Platform (MISP)

To facilitate the sharing/collecting/analyzing processes these IOCs usually respect and follow certain formats and protocols such as:

- OpenIOC

- Structured Threat Information eXpression (STIX)

- Trusted Automated Exchange of Intelligence Information (TAXII)

For example, this is the IOC STIX representation of WannaCry ransomware:

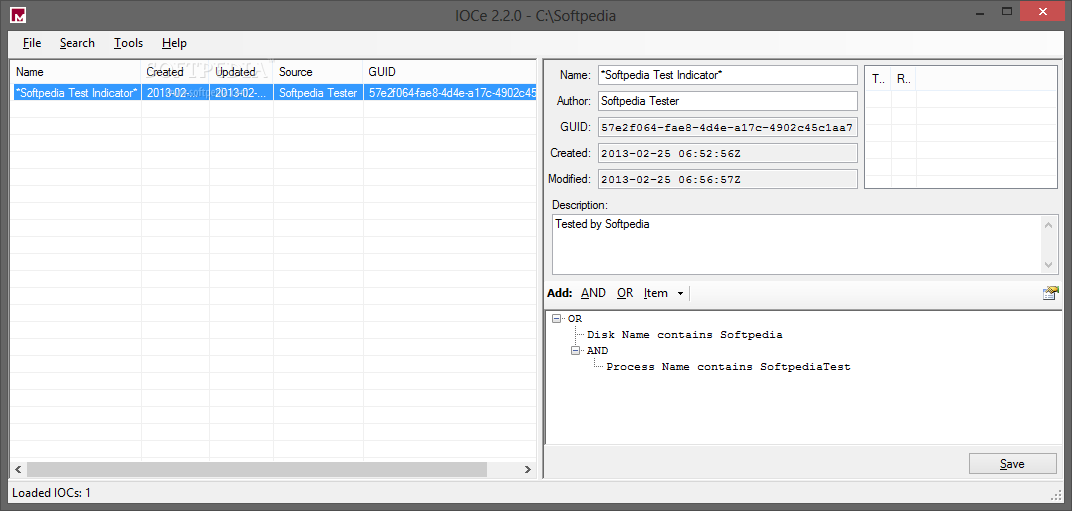
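To give a feel for the format in text form, the sketch below builds a minimal STIX 2.1 indicator object as plain JSON using only the Python standard library. It is not the WannaCry indicator from the image: the hash is a placeholder computed from a harmless byte string, and `make_stix_indicator` is a hypothetical helper name.

```python
import hashlib
import json
import uuid
from datetime import datetime, timezone


def make_stix_indicator(name: str, sha256: str) -> dict:
    """Build a minimal STIX 2.1 indicator for a file hash (illustrative only)."""
    ts = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.000Z")
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": ts,
        "modified": ts,
        "name": name,
        "indicator_types": ["malicious-activity"],
        # STIX patterning: match any file whose SHA-256 equals the given value.
        "pattern": f"[file:hashes.'SHA-256' = '{sha256}']",
        "pattern_type": "stix",
        "valid_from": ts,
    }


# Placeholder hash of a harmless string, NOT a real malware hash.
placeholder_hash = hashlib.sha256(b"not a real malware sample").hexdigest()
indicator = make_stix_indicator("Example file hash indicator", placeholder_hash)
print(json.dumps(indicator, indent=2))
```

An object like this could then be exchanged over TAXII or imported into a sharing platform; real deployments would more likely rely on a dedicated STIX library than on hand-built dictionaries.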

To help you create and edit your indicators of compromise you can use, for example, IOC Editor by FireEye, which comes with a user guide.

It lets you create your indicators of compromise using a graphical interface.

It also gives you the ability to compare IOCs.

A Definition of Indicators of Compromise

Indicators of compromise (IOCs) are “pieces of forensic data, such as data found in system log entries or files, that identify potentially malicious activity on a system or network.” Indicators of compromise aid information security and IT professionals in detecting data breaches, malware infections, or other threat activity. By monitoring for indicators of compromise, organizations can detect attacks and act quickly to prevent breaches from occurring or limit damages by stopping attacks in earlier stages.

Indicators of compromise act as breadcrumbs that lead infosec and IT pros to detect malicious activity early in the attack sequence. These unusual activities are the red flags that indicate a potential or in-progress attack that could lead to a data breach or systems compromise. But IOCs are not always easy to detect; they can be as simple as metadata elements or as complex as malicious code and content samples. Analysts often identify various IOCs to look for correlations and piece them together to analyze a potential threat or incident.

Indicators of Compromise vs. Indicators of Attack

Indicators of attack are similar to IOCs, but rather than focusing on forensic analysis of a compromise that has already taken place, indicators of attack focus on identifying attacker activity while an attack is in process. Indicators of compromise help answer the question “What happened?” while indicators of attack can help answer questions like “What is happening and why?” A proactive approach to detection uses both IOAs and IOCs to discover security incidents or threats as close to real-time as possible.

Examples of Indicators of Compromise

There are several indicators of compromise that organizations should monitor. The following list highlights 15 key indicators of compromise:

- Unusual Outbound Network Traffic

- Anomalies in Privileged User Account Activity

- Geographical Irregularities

- Log-In Red Flags

- Increases in Database Read Volume

- HTML Response Sizes

- Large Numbers of Requests for the Same File

- Mismatched Port-Application Traffic

- Suspicious Registry or System File Changes

- Unusual DNS Requests

- Unexpected Patching of Systems

- Mobile Device Profile Changes

- Bundles of Data in the Wrong Place

- Web Traffic with Unhuman Behavior

- Signs of DDoS Activity
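As an illustration of how one item from this list might be checked, the sketch below looks for log-in red flags by counting failed log-ins per account. The event fields (`user`, `outcome`), the threshold of three failures, and the sample events are assumptions made for this example; real log formats and sensible thresholds will differ.

```python
from collections import Counter


def flag_failed_logins(events: list[dict], threshold: int = 3) -> list[str]:
    """Return accounts with at least `threshold` failed log-ins, sorted by name."""
    failures = Counter(e["user"] for e in events if e["outcome"] == "failure")
    return sorted(user for user, count in failures.items() if count >= threshold)


# Made-up authentication events.
events = [
    {"user": "alice", "outcome": "success"},
    {"user": "bob", "outcome": "failure"},
    {"user": "bob", "outcome": "failure"},
    {"user": "bob", "outcome": "failure"},
    {"user": "carol", "outcome": "failure"},
    {"user": "bob", "outcome": "success"},
]

flagged = flag_failed_logins(events)
print("Accounts to review:", flagged)
```

A run of failures followed by a success, as with the `bob` account here, is the kind of pattern an analyst would want to look at more closely.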

Using Indicators of Compromise to Improve Detection and Response

Monitoring for indicators of compromise enables organizations to better detect and respond to security compromises. Collecting and correlating IOCs in real-time means that organizations can more quickly identify security incidents that may have gone undetected by other tools, and it provides the necessary resources to perform forensic analysis of incidents. If security teams discover recurrence or patterns of specific IOCs, they can update their security tools and policies to protect against future attacks as well.

There is a push for organizations to report these analysis results in a consistent, well-structured manner to help companies and IT professionals automate the processes used in detecting, preventing, and reporting security incidents. Some in the industry argue that documenting IOCs and threats helps organizations and individuals share information among the IT community as well as improve incident response and computer forensics. The OpenIOC framework is one way to consistently describe the results of malware analysis. Other efforts such as STIX and TAXII aim to standardize IOC documentation and reporting.
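A very small version of correlating IOCs against collected logs might look like the sketch below. It assumes simple key=value log lines and a hand-maintained set of known indicators; the log lines, the `KNOWN_IOCS` values, and the `correlate` helper are all hypothetical.

```python
import re

# Hypothetical indicators, using documentation-only addresses and domains.
KNOWN_IOCS = {"198.51.100.7": "ipv4", "evil.example.com": "domain"}

# Split on anything that cannot be part of an IP address or domain name.
TOKEN_SPLIT = re.compile(r"[^a-z0-9.\-]+")


def correlate(log_lines: list[str], iocs: dict[str, str]) -> dict[str, list[int]]:
    """Map each matched indicator to the 1-based line numbers where it appears."""
    hits: dict[str, list[int]] = {}
    for lineno, line in enumerate(log_lines, start=1):
        tokens = set(TOKEN_SPLIT.split(line.lower()))
        # Whole-token matching avoids 198.51.100.7 matching 198.51.100.70.
        for value in sorted(iocs.keys() & tokens):
            hits.setdefault(value, []).append(lineno)
    return hits


# Made-up log lines.
log_lines = [
    "2024-01-01T10:00:00 src=10.0.0.5 dst=198.51.100.7 action=allow",
    "2024-01-01T10:00:05 dns query=evil.example.com",
    "2024-01-01T10:00:09 src=10.0.0.5 dst=198.51.100.70 action=allow",
]

hits = correlate(log_lines, KNOWN_IOCS)
for value, lines in hits.items():
    print(f"{value} ({KNOWN_IOCS[value]}): lines {lines}")
```

Recurring hits for the same indicator are the kind of pattern that would justify updating detection rules or blocklists.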

Indicators of compromise are an important component in the battle against malware and cyberattacks. While they are reactive in nature, organizations that monitor for IOCs diligently and keep up with the latest IOC discoveries and reporting can improve detection rates and response times significantly.
