A Deep Dive Understanding of Smart Contract Vulnerabilities - Part 1

This article serves as a mini-course on smart contract security and provides an extensive list of the issues and vulnerabilities that tend to recur in Solidity smart contracts.

A security issue in Solidity boils down to smart contracts not behaving the way they were intended to. This can fall into four broad categories:

- Funds getting stolen

- Funds getting locked up or frozen inside a contract

- People receive fewer rewards than anticipated (rewards are delayed or reduced)

- People receive more rewards than anticipated (leading to inflation and devaluation)

It isn’t possible to make a comprehensive list of everything that can go wrong. However, just as traditional software engineering has common themes of vulnerabilities such as SQL injection, buffer overruns, and cross-site scripting, smart contracts have recurring anti-patterns that can be documented.

Smart contract hacks and vulnerabilities

Think of this guide as more of a reference. It isn’t possible to discuss every concept in detail without turning this into a book (fair warning: this article is 10k+ words long, so feel free to bookmark it and read it in chunks). However, it serves as a list of what to look out for and what to study. If a topic feels unfamiliar, that should serve as an indicator that it is worth putting time into practicing identifying that class of vulnerability.

Prerequisites

This article assumes basic proficiency in Solidity. If you are new to Solidity, please see our free Solidity tutorial.

Reentrancy

We’ve written extensively on smart contract reentrancy so we won’t repeat it here. But here is a quick summary:

Whenever a smart contract calls the function of another smart contract, sends Ether to it, or transfers a token to it, then there is a possibility of re-entrancy.

- When Ether is transferred, the receiving contract’s fallback or receive function is called. This hands control over to the receiver.

- Some token protocols alert the receiving smart contract that they have received the token by calling a predetermined function. This hands the control flow over to that function.

- When an attacking contract receives control, it doesn’t have to call the same function that handed over control. It could call a different function in the victim smart contract (cross-function reentrancy) or even a different contract (cross-contract reentrancy)

- Read-only reentrancy happens when a view function is accessed while the contract is in an intermediate state.

Despite reentrancy likely being the most well-known smart contract vulnerability, it only makes up a small percentage of hacks that happen in the wild. Security researcher Pascal Caversaccio (pcaversaccio) keeps an up-to-date GitHub list of reentrancy attacks. As of April 2023, 46 reentrancy attacks have been documented in that repository.

Access Control

It seems like a simple mistake, but forgetting to place restrictions on who can call a sensitive function (like withdrawing ether or changing ownership) happens surprisingly often.

Even if a modifier is in place, there have been cases where the modifier was not implemented correctly, such as in the example below where the require statement is missing.

// DO NOT USE!

modifier onlyMinter {

// BUG: the comparison is evaluated but its result is discarded (no require)

minters[msg.sender] == true;

_;

}

The code above is a real example from this audit: https://code4rena.com/reports/2023-01-rabbithole/#h-01-bad-implementation-in-minter-access-control-for-rabbitholereceipt-and-rabbitholetickets-contracts
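A corrected modifier wraps the check in require so that a failed check reverts. The following is a minimal sketch rather than the audited project's code: the contract name MinterGated, the Minted event, and the constructor that registers the deployer as a minter are assumptions made for illustration.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical contract for illustration; not the audited project's code.
contract MinterGated {
    mapping(address => bool) public minters;

    event Minted(address indexed to, uint256 amount);

    constructor() {
        // Assumption: the deployer is the first authorized minter.
        minters[msg.sender] = true;
    }

    modifier onlyMinter() {
        // require reverts the call when the sender is not a minter.
        require(minters[msg.sender], "not a minter");
        _;
    }

    function mint(address to, uint256 amount) external onlyMinter {
        // Real minting logic would go here; an event stands in for it.
        emit Minted(to, amount);
    }
}
```

A unit test that calls mint from a non-minter address and expects a revert would have caught the original bug.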

Here is another way access control can go wrong

function claimAirdrop(bytes32[] calldata proof) external {

bool verified = MerkleProof.verifyCalldata(proof, merkleRoot, keccak256(abi.encode(msg.sender)));

require(verified, "not verified");

require(!alreadyClaimed[msg.sender], "already claimed");

_transfer(msg.sender, AIRDROP_AMOUNT);

}

In this case, “alreadyClaimed” is never set to true, so the claimant can call the function multiple times.

Real-life example: Trader bot exploited

A fairly recent example of insufficient access control was an unprotected function to receive flash loans by a trading bot (which went by the name 0xbad, as the address started with that sequence). It racked up over a million dollars in profit until one day an attacker noticed any address could call the flashloan receive function, not just the flashloan provider.

As is usually the case with trading bots, the smart contract code to execute the trades was not verified, but the attacker discovered the weakness anyway. More info in the rekt news coverage.

Improper Input Validation

If access control is about controlling who calls a function, input validation is about controlling what they call the contract with.

This usually comes down to forgetting to put the proper require statements in place.

Here is a rudimentary example

contract UnsafeBank {

mapping(address => uint256) public balances;

// allow depositing on other's behalf

function deposit(address account) public payable {

balances[account] += msg.value;

}

function withdraw(address from, uint256 amount) public {

require(balances[from] >= amount, "insufficient balance");

balances[from] -= amount;

msg.sender.call{value: amount}("");

}

}

The contract above does check that you aren’t withdrawing more than you have in your account, but it doesn’t stop you from withdrawing from an arbitrary account.
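One way to close the hole is to drop the from parameter and always debit msg.sender. The sketch below is an illustrative rewrite under that assumption; the name SaferBank is hypothetical, and it also checks the result of the Ether transfer.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical rewrite for illustration.
contract SaferBank {
    mapping(address => uint256) public balances;

    // Depositing on someone else's behalf is still allowed.
    function deposit(address account) external payable {
        balances[account] += msg.value;
    }

    // The account being debited is always the caller.
    function withdraw(uint256 amount) external {
        require(balances[msg.sender] >= amount, "insufficient balance");

        // Update state before the external call to limit reentrancy risk.
        balances[msg.sender] -= amount;

        (bool ok, ) = msg.sender.call{value: amount}("");
        require(ok, "transfer failed");
    }
}
```

If withdrawing on behalf of another account is a real requirement, an explicit allowance mechanism would be needed instead of a free-form from argument.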

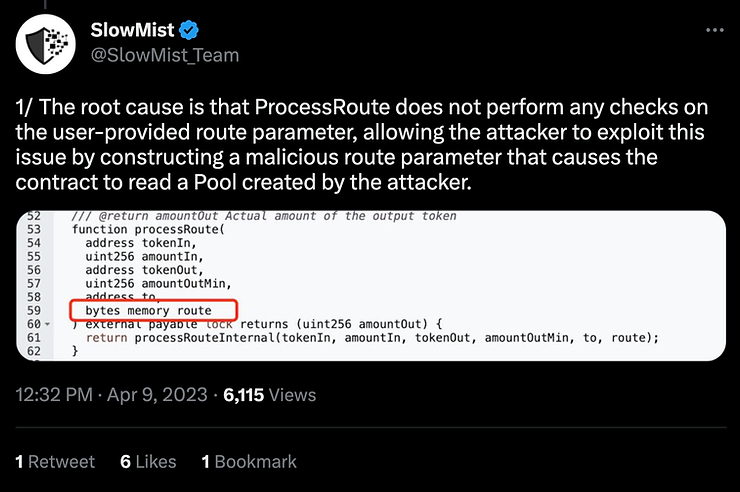

Real-life example: Sushiswap

Sushiswap experienced a hack of this type due to one of the parameters of an external function not being sanitized.

What is the difference between improper access control and improper input validation?

Improper access control means msg.sender does not have adequate restrictions. Improper input validation means the arguments to the function are not sufficiently sanitized. There is also an inverse to this anti-pattern: placing too much restriction on a function call.

Excessive Function Restriction

Excessive validation probably means funds won’t get stolen, but it could mean funds get locked into the contract. Having too many safeguards in place is not a good thing either.

Real-life example: Akutars NFT

One of the most high-profile incidents was the Akutars NFT which ended up with 34 million dollars worth of ETH stuck inside the smart contract and unwithdrawable.

The contract had a well-intentioned mechanism to prevent the owner of the contract from withdrawing until all refunds from paying above the Dutch auction price had been given. However, due to a bug documented in the Twitter thread linked below, the owner was unable to withdraw the funds.

Getting the balance right

Sushiswap gave too much power to untrusted users, and the Akutars NFT gave too little power to the admin. When designing smart contracts, a subjective judgement must be made about how much liberty each class of users should have, and this decision cannot be left to automated testing and tooling. There are significant tradeoffs between decentralization, security, and UX that must be considered.

For the smart contract programmer, explicitly writing out what users should and should not be able to do with certain functions is an important part of the development process.

We will revisit the topic of overpowered admins later.

Security often boils down to managing the way money exits the contract

As stated in the introduction, there are four primary ways smart contracts get hacked:

- Money stolen

- Money frozen

- Insufficient rewards

- Excessive rewards

“Money” here means anything of value, such as tokens, not just cryptocurrency. When coding or auditing a smart contract, the developer must be conscious of the intended ways value is to flow in and out of the contract. The issues listed above are the primary ways smart contracts get hacked, but there are a lot of other root causes that can cascade into major issues, which are documented below.

Double voting or msg.sender spoofing

Using vanilla ERC20 tokens or NFTs as tickets to weigh votes is unsafe because attackers can vote with one address, transfer the tokens to another address, and vote again from that address.

Here is a minimal example

// A malicious voter can simply transfer their tokens to

// another address and vote again.

contract UnsafeBallot {

uint256 public proposal1VoteCount;

uint256 public proposal2VoteCount;

IERC20 immutable private governanceToken;

mapping(address => bool) public alreadyVoted;

constructor(IERC20 _governanceToken) {

governanceToken = _governanceToken;

}

function voteFor1() external notAlreadyVoted {

proposal1VoteCount += governanceToken.balanceOf(msg.sender);

}

function voteFor2() external notAlreadyVoted {

proposal2VoteCount += governanceToken.balanceOf(msg.sender);

}

// prevent the same address from voting twice,

// however the attacker can simply

// transfer to a new address

modifier notAlreadyVoted {

require(!alreadyVoted[msg.sender], "already voted");

_;

alreadyVoted[msg.sender] = true;

}

}

To prevent this attack, ERC20 Snapshot or ERC20 Votes should be used. By snapshotting a point of time in the past, the current token balances cannot be manipulated to gain illicit voting power.
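A snapshot-based ballot could look roughly like the sketch below. It assumes the governance token exposes a getPastVotes(account, timepoint) function in the style of OpenZeppelin's ERC20Votes and that the token's clock is block-number based. The IVotesLike interface and the SnapshotBallot contract are hypothetical names introduced here.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Assumed minimal interface, modeled on getPastVotes from ERC20Votes.
interface IVotesLike {
    function getPastVotes(address account, uint256 timepoint) external view returns (uint256);
}

// Hypothetical ballot that reads voting power from a past block.
contract SnapshotBallot {
    IVotesLike private immutable governanceToken;
    uint256 public immutable snapshotBlock;

    uint256 public proposal1VoteCount;
    uint256 public proposal2VoteCount;
    mapping(address => bool) public alreadyVoted;

    constructor(IVotesLike _governanceToken) {
        governanceToken = _governanceToken;
        // Voting power is fixed at a block that is already in the past,
        // so moving tokens afterwards does not create new votes.
        snapshotBlock = block.number - 1;
    }

    modifier notAlreadyVoted() {
        require(!alreadyVoted[msg.sender], "already voted");
        alreadyVoted[msg.sender] = true;
        _;
    }

    function voteFor1() external notAlreadyVoted {
        proposal1VoteCount += governanceToken.getPastVotes(msg.sender, snapshotBlock);
    }

    function voteFor2() external notAlreadyVoted {
        proposal2VoteCount += governanceToken.getPastVotes(msg.sender, snapshotBlock);
    }
}
```

Because the weight comes from a fixed past block, transferring tokens to a fresh address after the snapshot gives that address no additional voting power.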

Flashloan Governance Attacks

However, using an ERC20 token with a snapshot or vote capability doesn’t fully solve the problem if someone can take a flashloan to temporarily increase their balance, and then take a snapshot of their balance in the same transaction. If that snapshot is used for voting, they will have an unreasonably large amount of votes at their disposal.

A flashloan lends a large amount of Ether or token to an address but reverts if the money is not repaid in the same transaction.

contract SimpleFlashloan {

IERC20 public token;

function borrowERC20Tokens(uint256 amount) public {

uint256 before = token.balanceOf(address(this));

// send tokens to the borrower

token.transfer(msg.sender, amount);

// hand control back to the borrower to

// let them do something

IBorrower(msg.sender).onFlashLoan();

// require that the tokens got returned

require(token.balanceOf(address(this)) >= before);

}

}

An attacker can use a flashloan to suddenly gain a lot of votes to swing proposals in their favor and/or do something malicious.

Flashloan Price Attacks

This is arguably the most common (or at least most high-profile) attack on DeFi, accounting for hundreds of millions of dollars lost. Here is a list of high-profile ones.

The price of an asset on the blockchain is often calculated as the current exchange rate between assets. For example, if a contract is currently trading 1 USDC for 100 k9coin, then you could say k9coin has a price of 0.01 USDC. However, prices generally move in response to buying and selling pressure, and flash loans can create massive buying and selling pressure.

When querying another smart contract about the price of an asset, the developer needs to be very careful because they are assuming the smart contract they are calling is immune to flash loan manipulation.
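To make the manipulation concrete, the sketch below models a constant-product pool with no fees and computes the spot price before and after one large purchase. The contract SpotPriceDemo, its function names, and the reserve figures in the note that follows are illustrative assumptions, and token decimals are ignored.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical constant-product pool math (no fees), for illustration only.
contract SpotPriceDemo {
    uint256 public constant SCALE = 1e18;

    // Price of one k9coin in USDC, scaled by 1e18.
    function spotPrice(uint256 usdcReserve, uint256 k9Reserve) public pure returns (uint256) {
        return (usdcReserve * SCALE) / k9Reserve;
    }

    // Reserves after a buyer swaps usdcIn into the pool while the
    // product usdcReserve * k9Reserve is held constant.
    function reservesAfterBuy(uint256 usdcReserve, uint256 k9Reserve, uint256 usdcIn)
        public
        pure
        returns (uint256 newUsdcReserve, uint256 newK9Reserve)
    {
        newUsdcReserve = usdcReserve + usdcIn;
        newK9Reserve = (usdcReserve * k9Reserve) / newUsdcReserve;
    }
}
```

With reserves of 1,000,000 USDC and 100,000,000 k9coin, spotPrice returns 1e16, which is 0.01 USDC. After a flash-loan-funded purchase of 9,000,000 USDC, the reserves become 10,000,000 and 10,000,000, and spotPrice returns 1e18, which is 1 USDC: a 100x jump within a single transaction. Any contract reading that spot price mid-transaction sees the inflated value.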

Bypassing the contract check

You can “check” if an address is a smart contract by looking at its bytecode size. Externally owned accounts (regular wallets) don’t have any bytecode. Here are a few ways of doing it

import "@openzeppelin/contracts/utils/Address.sol";

contract CheckIfContract {

using Address for address;

function addressIsContractV1(address _a) public view returns (bool) {

return _a.code.length != 0;

}

function addressIsContractV2(address _a) public view returns (bool) {

// use the openzeppelin library (isContract exists in v4.x and was removed in v5)

return _a.isContract();

}

}

However, this has a few limitations:

- If a contract makes an external call from a constructor, then its apparent bytecode size will be zero because the smart contract deployment code hasn’t returned the runtime code yet.

- The space might be empty now, but an attacker might know they can deploy a smart contract there in the future using create2.

In general, checking if an address is a contract is usually (but not always) an antipattern. Multisignature wallets are smart contracts themselves, and doing anything that might break multisignature wallets breaks composability.

The exception to this is checking if the target is a smart contract before calling a transfer hook. More on this later.

tx.origin

There is rarely a good reason to use tx.origin. If tx.origin is used to identify the sender, then a man-in-the-middle attack is possible. If the user is tricked into calling a malicious smart contract, then the smart contract can use all the authority tx.origin has to wreak havoc.

Consider the following exercise and the comments in the code.

contract Phish {

function phishingFunction() public {

// this fails, because this contract does not have approval/allowance

token.transferFrom(msg.sender, address(this), token.balanceOf(msg.sender));

// this also fails, because this creates approval for the contract,

// not the wallet calling this phishing function

token.approve(address(this), type(uint256).max);

}

}

This does not mean you are safe calling arbitrary smart contracts. But there is a layer of safety built into most protocols that will be bypassed if tx.origin is used for authentication.
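The difference can be sketched with a small wallet contract. OriginAuthWallet and its function names are hypothetical; the first withdrawal function shows the tx.origin mistake and the second shows the msg.sender check that avoids it.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical wallet for illustration.
contract OriginAuthWallet {
    address public immutable owner;

    constructor() {
        owner = msg.sender;
    }

    receive() external payable {}

    // DO NOT USE: if the owner is tricked into calling a malicious contract,
    // that contract can call this function and tx.origin will still be the owner.
    function withdrawUnsafe(address payable to, uint256 amount) external {
        require(tx.origin == owner, "not owner");
        (bool ok, ) = to.call{value: amount}("");
        require(ok, "send failed");
    }

    // Safer: only a call made directly by the owner passes the check.
    function withdraw(address payable to, uint256 amount) external {
        require(msg.sender == owner, "not owner");
        (bool ok, ) = to.call{value: amount}("");
        require(ok, "send failed");
    }
}
```

In the phishing scenario, msg.sender inside the wallet is the malicious contract, so withdraw reverts while withdrawUnsafe succeeds.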

Sometimes, you might see code that looks like this:

require(msg.sender == tx.origin, "no contracts");

When a smart contract calls another smart contract, msg.sender will be the smart contract and tx.origin will be the user’s wallet, thus giving a reliable indication that the incoming call is from a smart contract. This is true even if the call happens from the constructor.

Most of the time, this design pattern is not a good idea. Multisignature wallets and wallets from EIP 4337 won’t be able to interact with a function that has this code. This pattern can commonly be seen in NFT mints, where it’s reasonable to expect most users are using a traditional wallet. But as account abstraction becomes more popular, this pattern will hinder more than it helps.

Gas Griefing or Denial of Service

A griefing attack means the hacker is trying to "cause grief" for other people, even if they don't gain economically from doing so.

A smart contract can maliciously use up all the gas forwarded to it by going into an infinite loop. Consider the following example:

contract Mal {

fallback() external payable {

// infinite loop uses up all the gas

while (true) {

}

}

}

If another contract distributes ether to a list of addresses as follows:

contract Distribute {

function distribute(uint256 total) public nonReentrant {

for (uint i; i < addresses.length; ) {

(bool ok, ) = addresses[i].call{value: total / addresses.length}("");

// ignore ok, if it reverts we move on

// traditional gas saving trick for for loops

unchecked {

++i;

}

}

}

}

Then the function will revert when it sends ether to Mal. The call in the code above forwards 63 / 64 of the gas available (read more about this rule in our article on EIP 150), so there likely won’t be enough gas to complete the operation with only 1/64 of the gas left.
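A common way to sidestep this problem is the pull-payment pattern, which is a different technique from the push loop above: the contract only records what each address is owed, and each recipient withdraws in a separate transaction. The sketch below is illustrative; PullDistribute and its function names are hypothetical.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical pull-payment distributor for illustration.
contract PullDistribute {
    mapping(address => uint256) public owed;

    // Records each recipient's share; no external calls are made here,
    // so a malicious recipient cannot burn the distributor's gas.
    function fund(address[] calldata recipients) external payable {
        require(recipients.length > 0, "no recipients");
        uint256 share = msg.value / recipients.length;
        for (uint256 i = 0; i < recipients.length; ++i) {
            owed[recipients[i]] += share;
        }
        // Any remainder from the integer division stays in the contract.
    }

    // Each recipient pays for their own withdrawal.
    function claim() external {
        uint256 amount = owed[msg.sender];
        require(amount > 0, "nothing owed");
        owed[msg.sender] = 0;
        (bool ok, ) = msg.sender.call{value: amount}("");
        require(ok, "transfer failed");
    }
}
```

A recipient like Mal can still waste gas, but only its own, inside its own claim transaction.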

A smart contract can return a large memory array that consumes a lot of gas

Consider the following example

function largeReturn() public {

// result might be extremely long!

(bool ok, bytes memory result) =

otherContract.call(abi.encodeWithSignature("foo()"));

require(ok, "call failed");

}

Memory arrays use a quadratic amount of gas after 724 bytes, so a carefully chosen return data size can grief the caller.
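The 724-byte figure follows from the EVM memory cost formula, in which the total cost of having `words` 32-byte words of memory is 3 * words + words² / 512 (integer division). The helper below is a sketch that just evaluates that formula; the contract and function names are hypothetical.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical helper that evaluates the EVM memory cost formula.
contract MemoryCost {
    // Total gas cost of memory sized `words` 32-byte words.
    // The gas charged for an expansion is the difference between
    // the cost at the new size and the cost at the old size.
    function memoryGas(uint256 words) public pure returns (uint256) {
        return 3 * words + (words * words) / 512;
    }
}
```

The quadratic term is zero up to 22 words (memoryGas(22) returns 66) and first contributes at 23 words (memoryGas(23) returns 70). The crossover sits at the square root of 512, about 22.6 words, which is roughly 724 bytes. At 32,768 words (1 MiB) the quadratic term alone is 2,097,152 gas.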

Even if the variable result is not used, it is still copied to memory. If you want to restrict the return size to a certain amount, you can use assembly

function largeReturn() public {

assembly {

let ok := call(gas(), destinationAddress, value, dataOffset, dataSize, 0x00, 0x00)

// nothing is copied to memory until you

// use returndatacopy()

}

}

Deleting arrays that others can add to is also a denial-of-service vector

Although erasing storage is a gas-efficient operation, it still has a net cost. If an array becomes too long, it becomes impossible to delete. Here is a minimal example

contract VulnerableArray {

address[] public stuff;

function addSomething(address something) public {

stuff.push(something);

}

// if stuff is too long, this will become undeletable due to

// the gas cost

function deleteEverything() public onlyOwner {

delete stuff;

}

}

ERC777, ERC721, and ERC1155 can also be griefing vectors

If a smart contract transfers tokens that have transfer hooks, an attacker can set up a contract that does not accept the token (it either does not have an onReceive function or programs the function to revert). This will make the token untransferable and cause the entire transaction to revert.

Before using safeTransfer or transfer, consider the possibility that the receiver might force the transaction to revert.

contract Mal is IERC721Receiver, IERC1155Receiver, IERC777Receiver {

// this will intercept any transfer hook

fallback() external payable {

// infinite loop uses up all the gas

while (true) {

}

}

// we could also selectively deny transactions

function onERC721Received(address operator,

address from,

uint256 tokenId,

bytes calldata data

) external returns (bytes4) {

if (wakeUpChooseViolence()) {

revert();

}

else {

return IERC721Receiver.onERC721Received.selector;

}

}

}

Insecure Randomness

It is currently not possible to generate randomness securely with a single transaction on the blockchain. Blockchains need to be fully deterministic, otherwise distributed nodes would not be able to reach a consensus about the state. Because they are fully deterministic, any “random” number can be predicted. The following dice-rolling function can be exploited.

contract UnsafeDice {

function randomness() internal view returns (uint256) {

return uint256(keccak256(abi.encode(msg.sender, tx.origin, block.timestamp, tx.gasprice, blockhash(block.number - 1))));

}

// our dice can land on one of {0,1,2,3,4,5}

function rollDice() public payable {

require(msg.value == 1 ether);

if (randomness() % 6 == 5) {

msg.sender.call{value: 2 ether}("");

}

}

}

contract ExploitDice {

function randomness() internal view returns (uint256) {

// inside UnsafeDice, msg.sender will be this contract, so address(this) is used here

return uint256(keccak256(abi.encode(address(this), tx.origin, block.timestamp, tx.gasprice, blockhash(block.number - 1))));

}

function betSafely(IUnsafeDice game) public payable {

if (randomness() % 6 == 5) {

game.rollDice{value: 1 ether}();

}

// else don't do anything

}

}

It doesn’t matter how you generate randomness because an attacker can replicate it exactly. Throwing in more sources of “entropy” such as the msg.sender, timestamp, etc won’t have any effect because the smart contract can measure it too.

Using the Chainlink Randomness Oracle Wrong

Chainlink is a popular solution to get secure random numbers. It does it in two steps. First, the smart contract sends a randomness request to the oracle, then some blocks later, the oracle responds with a random number.

Since an attacker can’t predict the future, they can’t predict the random number. Unless the smart contract uses the oracle wrong.

- The smart contract requesting randomness must not do anything until the random number is returned. Otherwise, an attacker can monitor the mempool for the oracle returning the randomness and frontrun the oracle, knowing what the random number will be.

- The randomness oracles themselves might try to manipulate your application. They cannot pick random numbers without consensus from other nodes, but they can withhold and re-order random numbers if your application requests several at the same time.

- Finality is not instant on Ethereum or most other EVM chains. Just because some block is the most recent one, it doesn’t mean it will necessarily stay that way. This is called a “chain re-org”. In fact, the chain can alter more than just the final block; the number of blocks replaced is called the “re-org depth.” Etherscan reports re-orgs for various chains, for example Ethereum reorgs and Polygon reorgs. Reorgs can be as deep as 30 or more blocks on Polygon, so waiting fewer blocks can make the application vulnerable (this may change when the zk-evm becomes the standard consensus on Polygon, because the finality will match Ethereum’s, but this is a future prediction, not a fact about the present).

Here are the other chainlink randomness security considerations.

Getting stale data from a price Oracle

There is no SLA (service level agreement) for Chainlink to keep its price oracles up to date within a certain time frame. When the chain is severely congested (such as when the Yuga Labs Otherside mint overwhelmed Ethereum to the point of no transactions going through), the price updates might be delayed.

A smart contract that uses a price oracle must explicitly check the data is not stale, i.e. has been updated recently within some threshold. Otherwise, it cannot make a reliable decision concerning prices.

There is an added complication that if the price doesn’t change past a deviation threshold, the oracle might not update the price to save gas, so this could affect what time threshold is considered “stale.”

It is important to understand the SLA of an oracle a smart contract relies on.
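A staleness check can be sketched as follows. The interface reproduces the latestRoundData function of Chainlink's AggregatorV3Interface; the FreshPriceReader contract and its maxAge parameter are hypothetical, and a suitable maxAge depends on the specific feed's heartbeat and deviation threshold.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Subset of Chainlink's price feed interface, declared inline
// so the example is self-contained.
interface AggregatorV3Interface {
    function latestRoundData()
        external
        view
        returns (
            uint80 roundId,
            int256 answer,
            uint256 startedAt,
            uint256 updatedAt,
            uint80 answeredInRound
        );
}

// Hypothetical reader that rejects stale or non-positive prices.
contract FreshPriceReader {
    AggregatorV3Interface public immutable feed;
    uint256 public immutable maxAge; // assumed threshold, in seconds

    constructor(AggregatorV3Interface _feed, uint256 _maxAge) {
        feed = _feed;
        maxAge = _maxAge;
    }

    function freshPrice() external view returns (uint256) {
        (, int256 answer, , uint256 updatedAt, ) = feed.latestRoundData();
        require(answer > 0, "invalid price");
        // Reverts when the last update is older than the allowed age.
        require(block.timestamp - updatedAt <= maxAge, "stale price");
        return uint256(answer);
    }
}
```

Setting maxAge too low causes spurious reverts on feeds that only update when the price moves past the deviation threshold, so the value has to be chosen per feed.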

Relying on only one oracle

No matter how secure an oracle seems, an attack may be discovered in the future. The only defence against this is to use multiple independent oracles.

Oracles in general are hard to get right

The blockchain can be quite secure, but putting data onto the chain in the first place necessitates some kind of off-chain operation which forgoes all the security guarantees blockchains provide. Even if oracles remain honest, their source of data can be manipulated. For example, an oracle can reliably report prices from a centralized exchange, but those can be manipulated with large buy and sell orders. Similarly, oracles that depend on sensor data or some web2 API are subject to traditional hacking vectors.

A good smart contract architecture avoids the use of oracles altogether where possible.

Mixed accounting

Consider the following contract

contract MixedAccounting {

uint256 myBalance;

function deposit() public payable {

myBalance = myBalance + msg.value;

}

function myBalanceIntrospect() public view returns (uint256) {

return address(this).balance;

}

function myBalanceVariable() public view returns (uint256) {

return myBalance;

}

function notAlwaysTrue() public view returns (bool) {

return myBalanceIntrospect() == myBalanceVariable();

}

}

The contract above does not have a receive or fallback function, so directly transferring Ether to it will revert. However, a contract can forcefully send Ether to it with selfdestruct. In that case, myBalanceIntrospect() will be greater than myBalanceVariable(). Either accounting method is fine, but if you use both, then the contract may have inconsistent behaviour.

The same applies for ERC20 tokens.

contract MixedAccountingERC20 {

IERC20 token;

uint256 myTokenBalance;

function deposit(uint256 amount) public {

token.transferFrom(msg.sender, address(this), amount);

myTokenBalance = myTokenBalance + amount;

}

function myBalanceIntrospect() public view returns (uint256) {

return token.balanceOf(address(this));

}

function myBalanceVariable() public view returns (uint256) {

return myTokenBalance;

}

function notAlwaysTrue() public view returns (bool) {

return myBalanceIntrospect() == myBalanceVariable();

}

}

Again we cannot assume that myBalanceIntrospect() and myBalanceVariable() will always return the same value. It is possible to directly transfer ERC20 tokens to MixedAccountingERC20, bypassing the deposit function and not updating the myTokenBalance variable.

When checking the balances with introspection, using strict equality checks should be avoided as the balance can be changed by an outsider at will.

Treating cryptographic proofs like passwords

This isn’t a quirk of Solidity, more of a common misunderstanding among developers about how to use cryptography to give addresses special privileges. The following code is insecure

contract InsecureMerkleRoot {

bytes32 merkleRoot;

mapping(bytes32 => bool) alreadyClaimed;

function airdrop(bytes32[] calldata proof, bytes32 leaf) external {

require(MerkleProof.verifyCalldata(proof, merkleRoot, leaf), "not verified");

require(!alreadyClaimed[leaf], "already claimed airdrop");

alreadyClaimed[leaf] = true;

mint(msg.sender, AIRDROP_AMOUNT);

}

}

This code is insecure for three reasons:

- Anyone who knows the addresses that are selected for the airdrop can recreate the merkle tree and create a valid proof.

- The leaf isn’t hashed. An attacker can submit a leaf that equals the merkle root and bypass the require statement.

- Even if the above two issues are fixed, once someone submits valid proof, they can be frontrun.

Cryptographic proofs (merkle trees, signatures, etc) need to be tied to msg.sender, which an attacker cannot manipulate without acquiring the private key.
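A version that binds the proof to the caller might look like the sketch below. It assumes the Merkle tree was built with leaves equal to keccak256(abi.encode(address)) and uses OpenZeppelin's MerkleProof.verifyCalldata. The contract name SaferMerkleAirdrop and the Claimed event are hypothetical, and the event stands in for the actual token mint.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

import "@openzeppelin/contracts/utils/cryptography/MerkleProof.sol";

// Hypothetical airdrop for illustration.
contract SaferMerkleAirdrop {
    bytes32 public immutable merkleRoot;
    mapping(address => bool) public alreadyClaimed;

    event Claimed(address indexed account);

    constructor(bytes32 _merkleRoot) {
        merkleRoot = _merkleRoot;
    }

    function airdrop(bytes32[] calldata proof) external {
        // The leaf is derived from msg.sender inside the contract, so a proof
        // copied from the mempool is useless to any other address.
        bytes32 leaf = keccak256(abi.encode(msg.sender));
        require(MerkleProof.verifyCalldata(proof, merkleRoot, leaf), "not verified");

        require(!alreadyClaimed[msg.sender], "already claimed airdrop");
        alreadyClaimed[msg.sender] = true;

        // Token minting would go here.
        emit Claimed(msg.sender);
    }
}
```

Since the caller no longer supplies the leaf, it cannot be set to the root, and frontrunning a valid proof gains nothing because the proof only verifies for the address it was built for.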

Solidity does not upcast to the final uint size

function limitedMultiply(uint8 a, uint8 b) public pure returns (uint256 product) {

product = a * b;

}

Although the product is a uint256 variable, the multiplication result cannot be larger than 255 or the code will revert.

This issue can be mitigated by individually upcasting each variable.

function unlimitedMultiply(uint8 a, uint8 b) public pure returns (uint256 product) {

product = uint256(a) * uint256(b);

}

A situation like this can occur when multiplying integers that are packed in a struct, so be mindful of it when multiplying small values read from a packed struct.

struct Packed {

uint8 time;

uint16 rewardRate;

}

//...

Packed p;

p.time * p.rewardRate; // this might revert!

Solidity sneakily makes some literals uint8

The following code will revert because the ternary operator here returns a uint8.

function result(bool inp) external pure returns (uint256) {

return uint256(255) + (inp ? 1 : 0);

}

To make it not revert, do the following:

function result(bool inp) external pure returns (uint256) {

return uint256(255) + (inp ? uint256(1) : uint256(0));

}

You can learn more about this phenomenon in this tweet thread.

Solidity downcasting does not revert on overflow

Solidity does not check if it is safe to cast an integer to a smaller one. Unless some business logic ensures that the downcasting is safe, a library like SafeCast should be used.

function test(int256 value) public pure returns (int8) {

return int8(value + 1); // overflows and does not revert

}

Writes to storage pointers don’t save new data

The code below looks like it copies the data in myArray[1] to myArray[0], but it doesn’t. If you comment out the final line in the function, the compiler will say the function should be turned to a view function. The write to foo doesn’t write to the underlying storage.

contract DoesNotWrite {

struct Foo {

uint256 bar;

}

Foo[] public myArray;

function moveToSlot0() external {

Foo storage foo = myArray[0];

foo = myArray[1]; // myArray[0] is unchanged

// we do this to make the function a state

// changing operation

// and silence the compiler warning

myArray[1] = Foo({bar: 100});

}

}

So don’t expect an assignment to a storage pointer to write data: it only changes which storage location the pointer refers to.
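For contrast, the sketch below shows two forms that do write to storage: assigning directly to the array element, and assigning to a member through the pointer. The contract name DoesWrite and the constructor that seeds two elements are assumptions added so the example is self-contained.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical counterpart to the example above.
contract DoesWrite {
    struct Foo {
        uint256 bar;
    }

    Foo[] public myArray;

    constructor() {
        // Seed two elements so both indexes exist.
        myArray.push(Foo({bar: 1}));
        myArray.push(Foo({bar: 2}));
    }

    function moveToSlot0() external {
        // Assigning to the element itself copies the struct in storage.
        myArray[0] = myArray[1];
    }

    function setBarViaPointer() external {
        Foo storage foo = myArray[0];
        // Writing to a member through the pointer updates storage.
        foo.bar = 100;
    }
}
```

The distinction is between rebinding the pointer variable, which has no effect on storage, and writing through it or to the element it names, which does.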

Deleting structs that contain dynamic datatypes does not delete the dynamic data

If a mapping (or dynamic array) is inside a struct, and the struct is deleted, the mapping or array will not be deleted.

With the exception of deleting an array, the delete keyword can only delete one storage slot. If the storage slot contains references to other storage slots, those won’t be deleted.

contract NestedDelete {

mapping(uint256 => Foo) buzz;

struct Foo {

mapping(uint256 => uint256) bar;

}

Foo foo;

function addToFoo(uint256 i) external {

buzz[i].bar[5] = 6;

}

function getFromFoo(uint256 i) external view returns (uint256) {

return buzz[i].bar[5];

}

function deleteFoo(uint256 i) external {

// internal map still holds the data in the

// mapping and array

delete buzz[i];

}

}

Now let’s do the following transaction sequence

- addToFoo(1)

- getFromFoo(1) returns 6

- deleteFoo(1)

- getFromFoo(1) still returns 6!

Remember, maps are never “empty” in Solidity. So if someone accesses an item which has been deleted, the transaction will not revert but instead return the zero value for that datatype.

ERC20 Token Issues

If you only deal with trusted ERC20 tokens, most of these issues do not apply. However, when interacting with an arbitrary or partially untrusted ERC20 token, here are some things to watch out for.

ERC20: Fee on transfer

When dealing with untrusted tokens, you shouldn’t assume that your balance necessarily increases by the amount. It is possible for an ERC20 token to implement its transfer function as follows:

contract ERC20 {

// internally called by transfer() and transferFrom()

// balance and approval checks happen in the caller

function _transfer(address from, address to, uint256 amount) internal returns (bool) {

uint256 fee = amount / 100; // 1% fee

balanceOf[from] -= amount;

balanceOf[to] += (amount - fee);

balanceOf[TREASURY] += fee;

emit Transfer(from, to, (amount - fee));

return true;

}

}

This token applies a 1% tax to every transaction. So if a smart contract interacts with the token as follows, we will either get unexpected reverts or stolen money.

contract Stake {

mapping(address => uint256) public balancesInContract;

function stake(uint256 amount) public {

token.transferFrom(msg.sender, address(this), amount);

balancesInContract[msg.sender] += amount; // THIS IS WRONG!

}

function unstake() public {

uint256 toSend = balancesInContract[msg.sender];

delete balancesInContract[msg.sender];

// this could revert because toSend is 1% greater than

// the amount in the contract. Otherwise, 1% will be "stolen"

// from other depositors.

token.transfer(msg.sender, toSend);

}

}

ERC20: rebasing tokens

The rebasing token was popularized by Olympus DAO’s sOhm token and Ampleforth’s AMPL token. Coingecko maintains a list of rebasing ERC20 tokens.

When a token rebases, the total supply changes and everyone’s balance increases or decreases depending on the rebase direction.

The following code is likely to break when dealing with a rebasing token

contract WillBreak {

mapping(address => uint256) public balanceHeld;

IERC20 private rebasingToken;

function deposit(uint256 amount) external {

balanceHeld[msg.sender] = amount;

rebasingToken.transferFrom(msg.sender, address(this), amount);

}

function withdraw() external {

uint256 amount = balanceHeld[msg.sender];

delete balanceHeld[msg.sender];

// ERROR, amount might exceed the amount

// actually held by the contract

rebasingToken.transfer(msg.sender, amount);

}

}

The solution of many contracts is to simply disallow rebasing tokens. However, one could modify the code above to check balanceOf(address(this)) before transferring the account balance to the sender. Then it would still work even if the balance changes.
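The balance check described above can be sketched as follows. The contract RebaseTolerant is hypothetical and the IERC20 interface is declared inline so the example is self-contained. Capping the withdrawal at the contract's current balance only prevents the revert; it does not share a negative rebase fairly between depositors.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Minimal ERC20 interface declared inline for self-containment.
interface IERC20 {
    function balanceOf(address account) external view returns (uint256);
    function transfer(address to, uint256 amount) external returns (bool);
    function transferFrom(address from, address to, uint256 amount) external returns (bool);
}

// Hypothetical variant of the contract above.
contract RebaseTolerant {
    mapping(address => uint256) public balanceHeld;
    IERC20 private immutable rebasingToken;

    constructor(IERC20 _rebasingToken) {
        rebasingToken = _rebasingToken;
    }

    function deposit(uint256 amount) external {
        balanceHeld[msg.sender] += amount;
        require(rebasingToken.transferFrom(msg.sender, address(this), amount), "transferFrom failed");
    }

    function withdraw() external {
        uint256 amount = balanceHeld[msg.sender];
        delete balanceHeld[msg.sender];

        // Never try to send more than the contract actually holds.
        uint256 available = rebasingToken.balanceOf(address(this));
        if (amount > available) {
            amount = available;
        }
        require(rebasingToken.transfer(msg.sender, amount), "transfer failed");
    }
}
```

After a negative rebase, early withdrawers in this sketch are paid in full and later ones absorb the shortfall. Tracking each depositor's share of the pool instead of a raw amount is one way to spread rebases proportionally.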

ERC20: ERC777 in ERC20 clothing

ERC20 tokens, if implemented according to the standard, do not have transfer hooks, and thus transfer and transferFrom do not have a reentrancy issue.

There are meaningful advantages to tokens with transfer hooks, which is why all NFT standards implement them, and why ERC777 was finalized. However, it’s caused enough confusion that Openzeppelin deprecated the ERC777 library.

If you want your protocol to be compatible with tokens that behave like ERC20 tokens but have transfer hooks, then it’s a simple matter of treating the functions transfer and transferFrom as if they will issue a function call to the receiver.

This ERC777 re-entrancy happened to Uniswap (Openzeppelin documented the exploit here if you are curious).

ERC20: Not all ERC20 tokens return true

The ERC20 specification dictates that an ERC20 token must return true when a transfer succeeds. Because most ERC20 implementations cannot fail unless the allowance is insufficient or the amount transferred is too much, most devs have become accustomed to ignoring the return value of ERC20 tokens and assuming a failed transfer will revert.

Frankly, this is not consequential if you are only working with a trusted ERC20 token you know the behavior of. But when dealing with arbitrary ERC20 tokens, this variance in behavior must be accounted for.

There is an implicit expectation in many contracts that failed transfers should always revert rather than return false, because most ERC20 tokens don’t have a mechanism to return false. This has led to a lot of confusion.

Further complicating this matter is that some ERC20 tokens don’t follow the protocol of returning true, notably Tether. Some tokens revert on a failure to transfer, which will cause the revert to bubble up to the caller. Thus, some libraries wrap ERC20 token transfer calls to intercept the revert and return a boolean instead. Here are some implementations

- Openzeppelin SafeTransfer

- Solady SafeTransfer (considerably more gas efficient)
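The general idea behind such wrappers can be sketched with a low-level call that accepts either no return data or a returned true. This is a simplified illustration, not the OpenZeppelin or Solady implementation; the library name MinimalSafeTransfer is hypothetical, and unlike the boolean-returning wrappers described above, this sketch reverts on failure.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical wrapper for illustration; use an audited library in production.
library MinimalSafeTransfer {
    function safeTransfer(address token, address to, uint256 amount) internal {
        // A low-level call to an address without code succeeds silently,
        // so the target is checked first.
        require(token.code.length > 0, "token is not a contract");

        (bool success, bytes memory data) =
            token.call(abi.encodeWithSignature("transfer(address,uint256)", to, amount));

        // Accept tokens that return nothing, and tokens that return true.
        // Revert if the call failed or the token returned false.
        require(success && (data.length == 0 || abi.decode(data, (bool))), "transfer failed");
    }
}
```

A contract would call MinimalSafeTransfer.safeTransfer(address(token), recipient, amount) instead of token.transfer(recipient, amount), which gives the same revert-on-failure behavior across tokens that return true, return false, or return nothing.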

ERC20: Address Poisoning

This is not a smart contract vulnerability, but we mention it here for completeness.

Transferring zero ERC20 tokens is permitted by the specification. This can lead to confusion for frontend applications, and possibly trick users about who they recently sent tokens to. Metamask has more on that in this thread.

ERC20: Just flat out rugged

(In web3 parlance “rugged” means “having the rug pulled out from under you.”)

There’s nothing stopping someone from adding a function to an ERC20 token that lets them create, transfer, and burn tokens at will — or selfdestructing or upgrading. So fundamentally, there is a limit to how “untrusted” an ERC20 token can be.

Source :- https://www.rareskills.io/post/smart-contract-security
